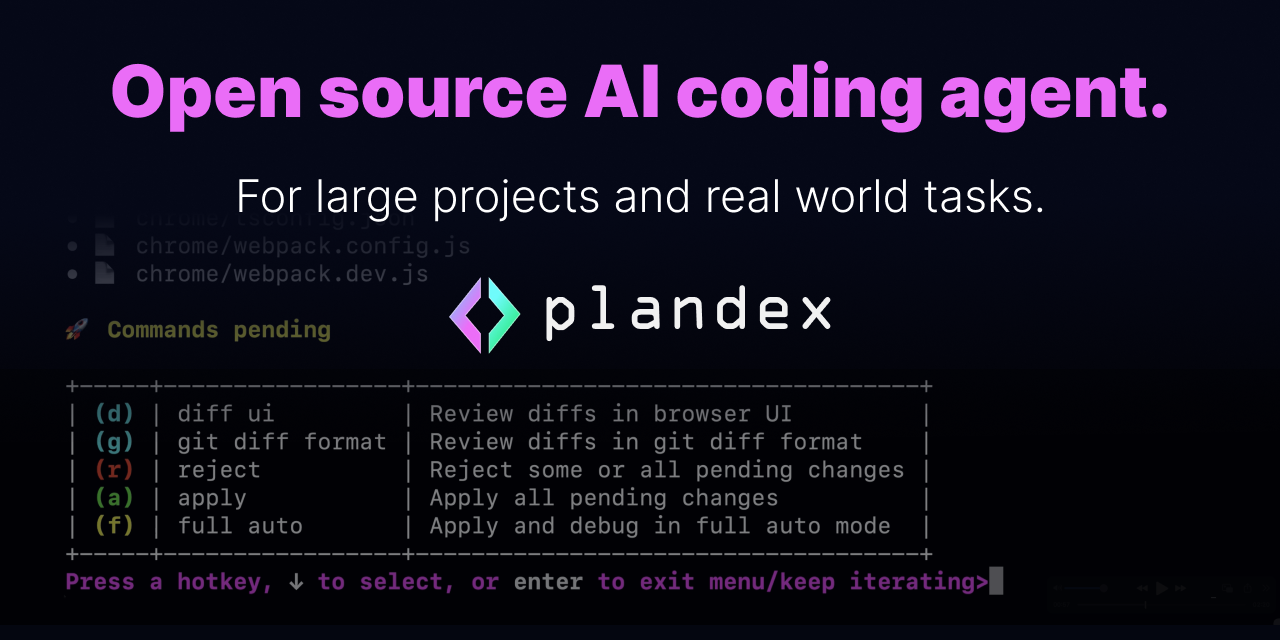

Open Interpreter

A terminal-based tool that lets LLMs execute code on your local machine with full system access.

Open Interpreter - Claude Code alternative

Open Interpreter allows large language models to execute code directly on your local machine. Users interact through a ChatGPT-like terminal interface or Python API. The tool provides natural language access to computer capabilities including creating and editing files, controlling browsers, and analyzing datasets. Solo developers prefer it when they need unrestricted local execution without vendor constraints or runtime limits.

Strengths

- Full internet access with no file-size or runtime limits, unlike hosted alternatives

- Supports 100+ language models through LiteLLM integration including GPT-4, Claude, and local models via Ollama/LM Studio

- Executes code in Python, JavaScript, Shell and additional programming languages

- Open-source under the AGPL-3.0 license with over 50,000 GitHub stars

- Can run completely offline with local models for privacy-sensitive work

- Built-in conversation history and streaming responses for interactive sessions

Weaknesses

- High LLM costs reported when using cloud APIs; users reported accumulating $20 in 15 minutes with GPT-4

- Executes code in your local environment, which creates a risk of data loss or security problems

- Default context window limited to 3,000 tokens in local mode

- Hardware device (01 Light) cancelled; team refunded orders to focus on software

- Terminal-first interface may present a learning curve for GUI-focused developers
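To put the reported cost figure in perspective, here is a back-of-the-envelope projection. It assumes spending continues at the same steady pace, which is a simplification; real sessions are bursty, and `hourly_burn_rate` is a hypothetical helper written for this example.

```python
def hourly_burn_rate(spent_usd: float, minutes: float) -> float:
    """Extrapolate an observed spend to a per-hour rate, assuming a constant pace."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return spent_usd * 60 / minutes


# The figure cited above: $20 accumulated in 15 minutes with GPT-4.
rate = hourly_burn_rate(20.0, 15.0)
print(f"${rate:.2f} per hour")  # prints "$80.00 per hour"
```

At that pace an unattended hour-long session would cost roughly four times the reported amount, which is why a spending cap is worth setting before long runs.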

Best for

Developers needing unrestricted local code execution, privacy-conscious users, and those wanting model flexibility without vendor lock-in.

Pricing plans

- Core Tool — Free — Open-source software; pay only for LLM API usage if using cloud models

- Local Models — Free — Zero marginal cost when using Ollama/LM Studio/Llamafile

- 01 Light Hardware — Discontinued — Originally $99; all pre-orders refunded as team pivoted to software-only approach

Tech details

- Type: Terminal/CLI-based code interpreter with Python API

- IDEs: Runs in terminal; can be integrated into VS Code terminal or Python scripts

- Key features: Natural language code execution, conversation history, streaming output, HTTP REST API support, YAML configuration profiles

- Privacy / hosting: Can run entirely locally with an offline mode; all code executes on the user's machine

- Models / context window: Supports 100+ models via LiteLLM; default 3,000 token context for local mode (configurable); works with GPT-4, Claude, local Llama models
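A small context window means older conversation turns have to be dropped once a session grows. The sketch below illustrates that idea only; it is not Open Interpreter's actual trimming logic. `estimate_tokens` is a hypothetical heuristic (about 4 characters per token), and real tokenizers will give different counts.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate (assumption: about 4 characters per token)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], context_window: int = 3000) -> list[dict]:
    """Keep the most recent messages whose estimated size fits the window."""
    kept: list[dict] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > context_window:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "x" * 8000},       # about 2,000 tokens
    {"role": "assistant", "content": "y" * 8000},  # about 2,000 tokens
    {"role": "user", "content": "z" * 2000},       # about 500 tokens
]
trimmed = trim_history(history, context_window=3000)
print([m["role"] for m in trimmed])  # prints ['assistant', 'user']
```

With a 3,000-token window the oldest message no longer fits, so the model loses that part of the conversation. Raising the configured window trades memory and speed for longer recall.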

When to choose this over Claude Code

- You need unrestricted internet access and no file size or runtime limits

- Privacy requirements demand fully local execution without cloud dependencies

- You want model flexibility to switch between GPT-4, Claude, or local open-source models

When Claude Code may be a better fit

- You prefer an integrated IDE experience over terminal-based workflows

- Cost control matters; Open Interpreter with cloud APIs can accumulate charges rapidly

- You need enterprise support and managed infrastructure rather than self-hosted setup

Conclusion

Open Interpreter serves as a Claude Code alternative for developers prioritizing local execution and model flexibility. The open-source tool removes hosting restrictions while providing natural language access to system capabilities. Users must manage API costs carefully and configure context windows appropriately. The platform suits privacy-focused workflows where full system access outweighs integrated IDE convenience.

Sources

- Official site: https://www.openinterpreter.com/

- Docs: https://docs.openinterpreter.com/

- Pricing: https://github.com/openinterpreter/open-interpreter

FAQ

What makes Open Interpreter different from ChatGPT Code Interpreter?

Open Interpreter runs locally without upload limits, runtime restrictions, or pre-installed package constraints. It provides full internet access and system-level control.

Can I use Open Interpreter without paying for APIs?

Yes. Configure a local model through Ollama, LM Studio, or Llamafile and you can run it free of charge, with zero API costs.

Is Open Interpreter safe to use on my production machine?

Generated code executes in your local environment, which creates potential security risks. Consider running it in a restricted environment such as Google Colab, or enabling safe mode.

Which programming languages does Open Interpreter support?

Python, JavaScript, Shell, and additional languages can be executed through the exec() function that Open Interpreter exposes to the model, which takes a language name and the code to run.
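The idea of a single entry point that takes a language name and a code string can be sketched as follows. This is an illustration only, not Open Interpreter's implementation: `run_code` and `RUNNERS` are hypothetical names, and the shell and JavaScript entries assume `sh` and `node` are available on the PATH.

```python
import subprocess
import sys

# Hypothetical mapping from language name to the command that runs a code string.
RUNNERS = {
    "python": [sys.executable, "-c"],
    "shell": ["sh", "-c"],         # assumes a POSIX shell is on PATH
    "javascript": ["node", "-e"],  # assumes Node.js is installed
}


def run_code(language: str, code: str, timeout: float = 30.0) -> str:
    """Run `code` with the command registered for `language` and return its stdout."""
    try:
        command = RUNNERS[language.lower()]
    except KeyError:
        raise ValueError(f"unsupported language: {language}") from None
    result = subprocess.run(
        command + [code],
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


print(run_code("python", "print(1 + 1)"))  # prints "2"
```

Each call here starts a fresh process, so no state carries over between snippets. A real interactive tool would more likely keep a long-lived session per language so that variables persist across steps.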

How do I control costs when using cloud models?

Set max_budget parameters, use shorter context windows (~1,000-3,000 tokens), and monitor token usage with the %tokens command.
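Conceptually, a spending cap is a running total checked before each request. The sketch below shows that logic under stated assumptions: `BudgetGuard` and `BudgetExceeded` are hypothetical names, the per-token prices are placeholders rather than real rates, and this is not how Open Interpreter implements its own budget setting.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a request would push spending past the cap."""


class BudgetGuard:
    """Track estimated spend and refuse requests that would exceed a cap."""

    def __init__(self, max_budget_usd: float) -> None:
        self.max_budget_usd = max_budget_usd
        self.spent_usd = 0.0

    def charge(
        self,
        prompt_tokens: int,
        completion_tokens: int,
        usd_per_1k_prompt: float,
        usd_per_1k_completion: float,
    ) -> float:
        """Record the cost of one request, or raise if it would exceed the cap."""
        cost = (
            prompt_tokens / 1000 * usd_per_1k_prompt
            + completion_tokens / 1000 * usd_per_1k_completion
        )
        if self.spent_usd + cost > self.max_budget_usd:
            raise BudgetExceeded(
                f"request costs ${cost:.2f}, but only "
                f"${self.max_budget_usd - self.spent_usd:.2f} remains"
            )
        self.spent_usd += cost
        return cost


# Placeholder prices, chosen only to make the arithmetic easy to follow.
guard = BudgetGuard(max_budget_usd=0.10)
guard.charge(1000, 500, usd_per_1k_prompt=0.03, usd_per_1k_completion=0.06)
print(f"spent so far: ${guard.spent_usd:.2f}")

try:
    guard.charge(1000, 500, usd_per_1k_prompt=0.03, usd_per_1k_completion=0.06)
except BudgetExceeded as error:
    print(f"blocked: {error}")
```

The first request fits under the cap and the second identical one is refused, leaving the recorded total unchanged. Shorter context windows lower `prompt_tokens` on every request, which is why the two measures work well together.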

What happened to the 01 Light hardware device?

The Open Interpreter team cancelled hardware manufacturing, refunded all pre-orders, and shifted focus entirely to software development.