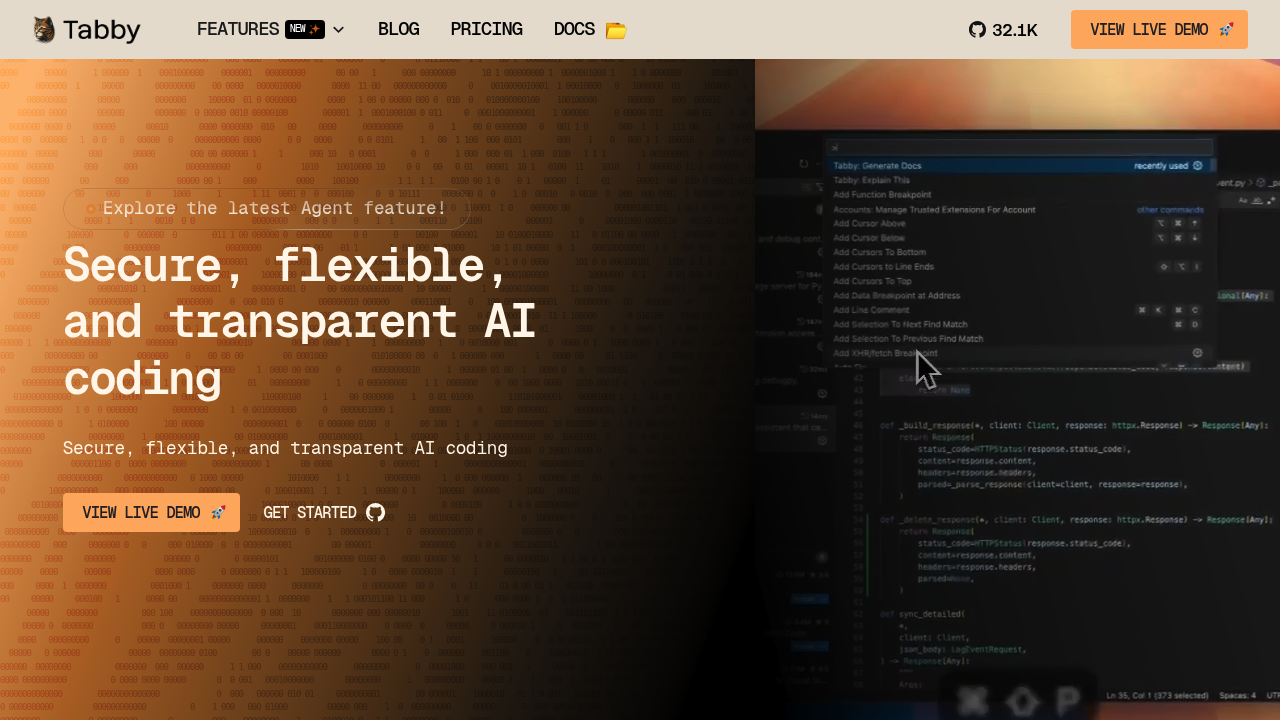

Tabby - Claude Code alternative

Tabby is an open-source AI coding assistant designed to bring AI into your development workflow while keeping you in control. It offers a self-hosted, on-premises alternative to GitHub Copilot that needs no external cloud services. Solo developers may value it for its data privacy and customizable deployment options, and teams can run their own LLM-powered code completion server.

Strengths

- Complete control over your development workflow with self-hosted deployment

- Compatible with major coding LLMs including CodeLlama, StarCoder, and CodeGen

- Self-contained solution requiring no external database or cloud dependencies

- Supports consumer-grade GPUs for cost-effective operation

- Open-source code base can be audited, which supports software supply chain safety

- OpenAPI interface enables easy integration with existing infrastructure
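As a rough illustration of the OpenAPI integration point listed above, the following Python sketch builds a completion request and reads suggestions out of the response. The server address, the `/v1/completions` path, the request fields (`language`, `segments.prefix`, `segments.suffix`), the `choices[].text` response shape, and the bearer-token header are assumptions based on Tabby's published API schema; check them against the OpenAPI document served by your own instance before relying on them.

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: a Tabby server is reachable here (hypothetical local address).
TABBY_URL = "http://localhost:8080"


def build_completion_request(prefix: str, suffix: str = "", language: str = "python") -> dict:
    """Build the JSON body for a completion request.

    Field names are assumed from Tabby's API schema; verify them on your server.
    """
    segments = {"prefix": prefix}
    if suffix:
        # Only send a suffix when there is code after the cursor.
        segments["suffix"] = suffix
    return {"language": language, "segments": segments}


def extract_suggestions(response_body: dict) -> List[str]:
    """Collect suggestion texts from a body shaped like {"choices": [{"text": ...}]}."""
    return [choice.get("text", "") for choice in response_body.get("choices", [])]


def complete(prefix: str, suffix: str = "", language: str = "python",
             token: Optional[str] = None) -> List[str]:
    """POST to the assumed /v1/completions endpoint and return suggestion texts."""
    payload = json.dumps(build_completion_request(prefix, suffix, language)).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        # Assumption: the server expects a bearer token when authentication is enabled.
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(
        f"{TABBY_URL}/v1/completions", data=payload, headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return extract_suggestions(json.load(response))
```

The two helper functions can be exercised without a server; only `complete` needs a running Tabby instance.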

Weaknesses

- Requires technical expertise for self-hosting and maintenance

- Code quality may vary, with some suggestions being junior-level implementations

- Limited cloud-based convenience compared to hosted solutions

- Smaller community and ecosystem than established alternatives

Best for

- Organizations prioritizing data privacy and custom deployment options

Pricing plans

- Open Source — Free — Up to 50 users, self-hosted deployment

- Team — $19/month per seat — Up to 50 users, flexible deployment

- Enterprise — Custom pricing — Tailored solutions with advanced features

- Tabby Cloud — Usage-based — $20 free monthly credits, pay-per-token billing
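To make the plan arithmetic concrete, here is a small Python sketch that estimates monthly cost from the figures listed above. It assumes the $20 of Tabby Cloud credits is a simple once-per-month deduction from metered usage, and the function names are hypothetical; actual billing rules may differ, so treat this as a back-of-the-envelope aid only.

```python
TEAM_PRICE_PER_SEAT = 19.0  # USD per seat per month (Team plan, as listed above)
TEAM_MAX_SEATS = 50         # seat cap listed for the Team plan
CLOUD_FREE_CREDITS = 20.0   # USD of free monthly credits (Tabby Cloud, as listed above)


def team_monthly_cost(seats: int) -> float:
    """Monthly Team plan cost in USD for the given number of seats."""
    if seats < 1:
        raise ValueError("seats must be at least 1")
    if seats > TEAM_MAX_SEATS:
        raise ValueError("more than 50 seats falls under Enterprise (custom pricing)")
    return seats * TEAM_PRICE_PER_SEAT


def cloud_monthly_cost(metered_usage_usd: float) -> float:
    """Monthly Tabby Cloud cost in USD after free credits.

    Assumption: credits are subtracted once per month from total metered usage.
    """
    return max(0.0, metered_usage_usd - CLOUD_FREE_CREDITS)
```

For example, `team_monthly_cost(10)` gives 190.0, and `cloud_monthly_cost(65.0)` gives 45.0 under the stated assumption.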

Tech details

- Type: Self-hosted AI coding assistant

- IDEs: VS Code and cloud IDEs; other integrations unknown

- Key features: Code completion, answer engine, in-line chat, context provider

- Privacy / hosting: Self-hosted, local deployment, complete data control

- Models / context window: CodeLlama, StarCoder, CodeGen; context window size unknown
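The self-hosted deployment described above is commonly started from a container. The Python sketch below assembles one plausible `docker run` invocation so the moving parts are visible. The image name `tabbyml/tabby`, the `serve` subcommand with `--model` and `--device`, the `/data` mount point, the container port 8080, and the model identifier `StarCoder-1B` are assumptions taken from Tabby's public quick-start material and may change between releases; the helper name is hypothetical.

```python
import shlex
from pathlib import Path
from typing import List, Optional


def tabby_docker_command(model: str = "StarCoder-1B", device: str = "cuda",
                         port: int = 8080, data_dir: Optional[str] = None) -> List[str]:
    """Return an argv list for launching Tabby in Docker (assumed flags, see lead-in)."""
    if data_dir is None:
        # Assumption: models and state are kept under ~/.tabby on the host.
        data_dir = str(Path.home() / ".tabby")
    command = ["docker", "run", "-it"]
    if device == "cuda":
        # Expose the host's NVIDIA GPUs to the container.
        command += ["--gpus", "all"]
    command += [
        "-p", f"{port}:8080",      # host port -> assumed container port
        "-v", f"{data_dir}:/data",  # persist downloaded models and state
        "tabbyml/tabby",
        "serve", "--model", model, "--device", device,
    ]
    return command


if __name__ == "__main__":
    # Print the command for review instead of running it.
    print(shlex.join(tabby_docker_command()))
```

Returning an argv list rather than a shell string keeps the command easy to inspect and to pass to `subprocess.run` once the flags have been confirmed against the current documentation.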

When to choose this over Claude Code

- You need complete control over your development data and infrastructure

- Your organization requires on-premises deployment without external cloud dependencies

- You want to use and combine different coding LLMs without vendor lock-in

When Claude Code may be a better fit

- You prefer cloud-hosted solutions with minimal setup requirements

- Your team lacks infrastructure expertise for self-hosting AI models

- You need immediate access without deployment and configuration overhead

Conclusion

Tabby stands out as a privacy-focused coding assistant that puts control in developers' hands. It is a compelling Claude Code alternative for teams that require data sovereignty and custom deployments. Its open-source nature provides transparency and flexibility, and with a free open-source plan alongside paid Team and Enterprise plans, it scales from individual developers to large organizations.

Sources

- Official site: https://www.tabbyml.com/

- Docs: https://tabby.tabbyml.com/docs/welcome/

- Pricing: https://www.tabbyml.com/pricing

FAQ

What makes Tabby different from other AI coding assistants?

Tabby is fully self-hosted and open-source, giving you complete control over your development workflow and data privacy. Unlike cloud-based alternatives, it runs entirely on your infrastructure.

Can Tabby run on consumer hardware?

Yes, Tabby supports consumer-grade GPUs and is designed to be self-contained without requiring expensive cloud infrastructure.

Which programming languages does Tabby support?

Tabby works with major coding LLMs including CodeLlama, StarCoder, and CodeGen, which support multiple programming languages. Specific language support depends on the chosen model.

Is there a free version of Tabby available?

Yes, Tabby offers a free Open Source plan supporting up to 50 users with self-hosted deployment. Tab completion features are always free with no usage limits.

How does Tabby's pricing compare to cloud alternatives?

Team plans start at $19/month per seat, while the cloud version uses usage-based pricing with $20 in free monthly credits. The self-hosted model can be more cost-effective for larger teams.

What level of technical expertise is needed to deploy Tabby?

Tabby requires technical knowledge for self-hosting setup, though it's designed to be self-contained with an OpenAPI interface for easier integration. The deployment complexity depends on your infrastructure requirements.